Apple Researchers Introduce Matryoshka Diffusion Models(MDM): An End-to-End Artificial Intelligence Framework for High-Resolution Image and Video Synthesis

Large Language Models have shown amazing capabilities in recent times. Diffusion models, in particular, have been widely used in a number of generative applications, from 3D modelling and text generation to image and video generation. Though these models cater to various tasks, they encounter significant difficulties when dealing with high-resolution data. It takes a lot of processing power and memory to scale them to high resolution since each step necessitates re-encoding the whole high-resolution input.

Deep architectures with attention blocks are frequently employed to overcome these issues, although they increase computational and memory demands and complicate optimisation. Researchers have been putting in efforts to develop effective network designs for high-resolution photos. The current approaches fall short of standard techniques like DALL-E 2 and IMAGEN in terms of output quality and have not demonstrated competitive results beyond 512×512 resolution.

These widely used techniques reduce computation by fusing many independently trained super-resolution diffusion models with a low-resolution model. Conversely, latent diffusion methods (LDMs) rely on a high-resolution autoencoder that has been individually trained, and they only train low-resolution diffusion models. Both strategies necessitate the use of multi-stage pipelines and meticulous hyperparameter optimisation.

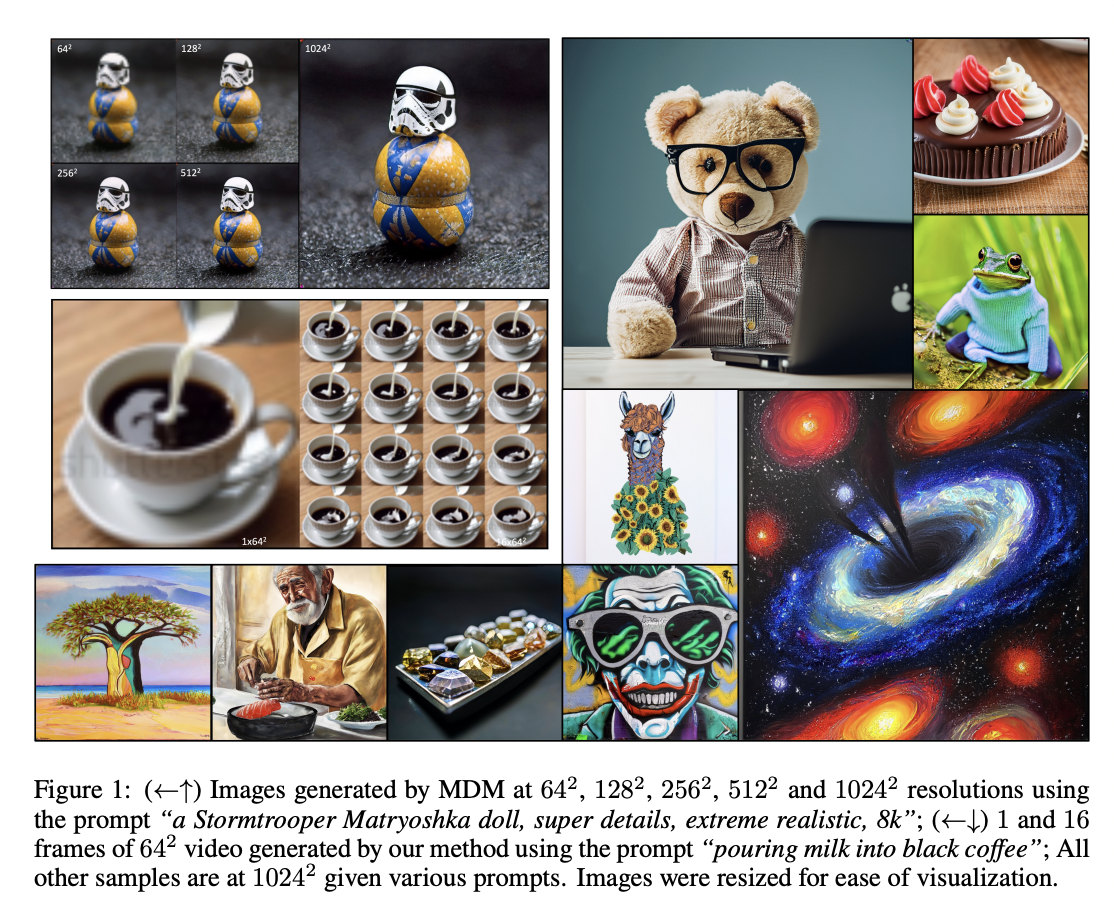

In recent research, a team of researchers from Apple has introduced Matryoshka Diffusion Models (MDM), a family of diffusion models that have been designed for end-to-end high-resolution image and video synthesis. MDM works on the idea of including the low-resolution diffusion process as a crucial component of high-resolution generation. This approach has been inspired by Generative Adversarial Networks (GANs) multi-scale learning, and the team has accomplished this by employing a Nested UNet architecture to carry out a combined diffusion process across several resolutions.

Some of the primary components of this approach are as follows.

- Multi-Resolution Diffusion Process: MDM includes a diffusion process that denoises inputs at several resolutions at once, which implies that it can simultaneously process and produce images with different levels of detail. For this, MDM uses a Nested UNet architecture.

- NestedUNet Architecture: Smaller scale input features and parameters are nested inside larger scale input features and parameters in the Nested UNet architecture. With this nesting, information can be shared effectively across scales, improving the model’s capacity to capture fine features while preserving computational efficiency.

- Progressive Training Plan: MDM presents a training plan that progresses gradually to higher resolutions, beginning at a lesser resolution. By using this training method, the optimisation process is enhanced, and the model is better able to learn how to produce high-resolution content.

The team has shared the performance and efficacy of this approach through a number of benchmark tests, such as text-to-video applications, high-resolution text-to-image production, and class-conditioned picture generation. MDM has demonstrated that it can train a single pixel-space model at up to 1024 × 1024 pixel resolution. Considering that this accomplishment was made using a comparatively small dataset (CC12M), which consists of just 12 million photos, it is extremely remarkable. MDM exhibits robust zero-shot generalisation, which enables it to produce high-quality information for resolutions that it hasn’t been specifically trained on. In conclusion, Matryoshka Diffusion Models (MDM) represents an incredible step forward in the realm of high-resolution image and video synthesis.

Check out the Paper. All Credit For This Research Goes To the Researchers on This Project. Also, don’t forget to join our 32k+ ML SubReddit, 40k+ Facebook Community, Discord Channel, and Email Newsletter, where we share the latest AI research news, cool AI projects, and more.

If you like our work, you will love our newsletter..

We are also on Telegram and WhatsApp.

Tanya Malhotra is a final year undergrad from the University of Petroleum & Energy Studies, Dehradun, pursuing BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning.She is a Data Science enthusiast with good analytical and critical thinking, along with an ardent interest in acquiring new skills, leading groups, and managing work in an organized manner.