CMU Researchers Unveil Diffusion-TTA: Elevating Discriminative AI Models with Generative Feedback for Unparalleled Test-Time Adaptation

Diffusion models are used to generate high-quality samples from complex data distributions. Discriminative diffusion models apply the same principles to tasks like classification or regression, where the goal is to predict labels or outputs for a given input. In doing so, they can offer advantages such as better handling of uncertainty, robustness to noise, and the potential to capture complex dependencies within the data.

Generative models can also identify anomalies or outliers by quantifying how far a new data point deviates from the learned data distribution, which lets them separate normal from abnormal instances in anomaly detection tasks. Traditionally, generative and discriminative models have been treated as competing alternatives. Researchers at Carnegie Mellon University instead couple the two at inference time, combining the iterative reasoning of generative inversion with the fitting ability of discriminative models.
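
As a minimal sketch of likelihood-based anomaly scoring, the code below swaps in a diagonal Gaussian for the learned generative model (a diffusion model estimates likelihood very differently) and scores a point by its negative log-likelihood. The function names and toy data are hypothetical, and the choice of threshold is left open.

```python
import math
from typing import List, Sequence, Tuple


def fit_diagonal_gaussian(data: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Fit per-feature mean and variance: a deliberately tiny 'generative model'."""
    n, d = len(data), len(data[0])
    mean = [sum(row[j] for row in data) / n for j in range(d)]
    # A small constant keeps the variance strictly positive.
    var = [sum((row[j] - mean[j]) ** 2 for row in data) / n + 1e-6 for j in range(d)]
    return mean, var


def anomaly_score(x: Sequence[float], mean: Sequence[float], var: Sequence[float]) -> float:
    """Negative log-likelihood of x under the fitted Gaussian: higher means more anomalous."""
    return sum(0.5 * math.log(2 * math.pi * v) + (xi - m) ** 2 / (2 * v)
               for xi, m, v in zip(x, mean, var))
```

One simple choice of threshold is the highest score observed on the training data; a point such as `[5.0, 5.0]`, far from training data clustered around `[0.5, 0.5]`, scores well above it and would be flagged.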

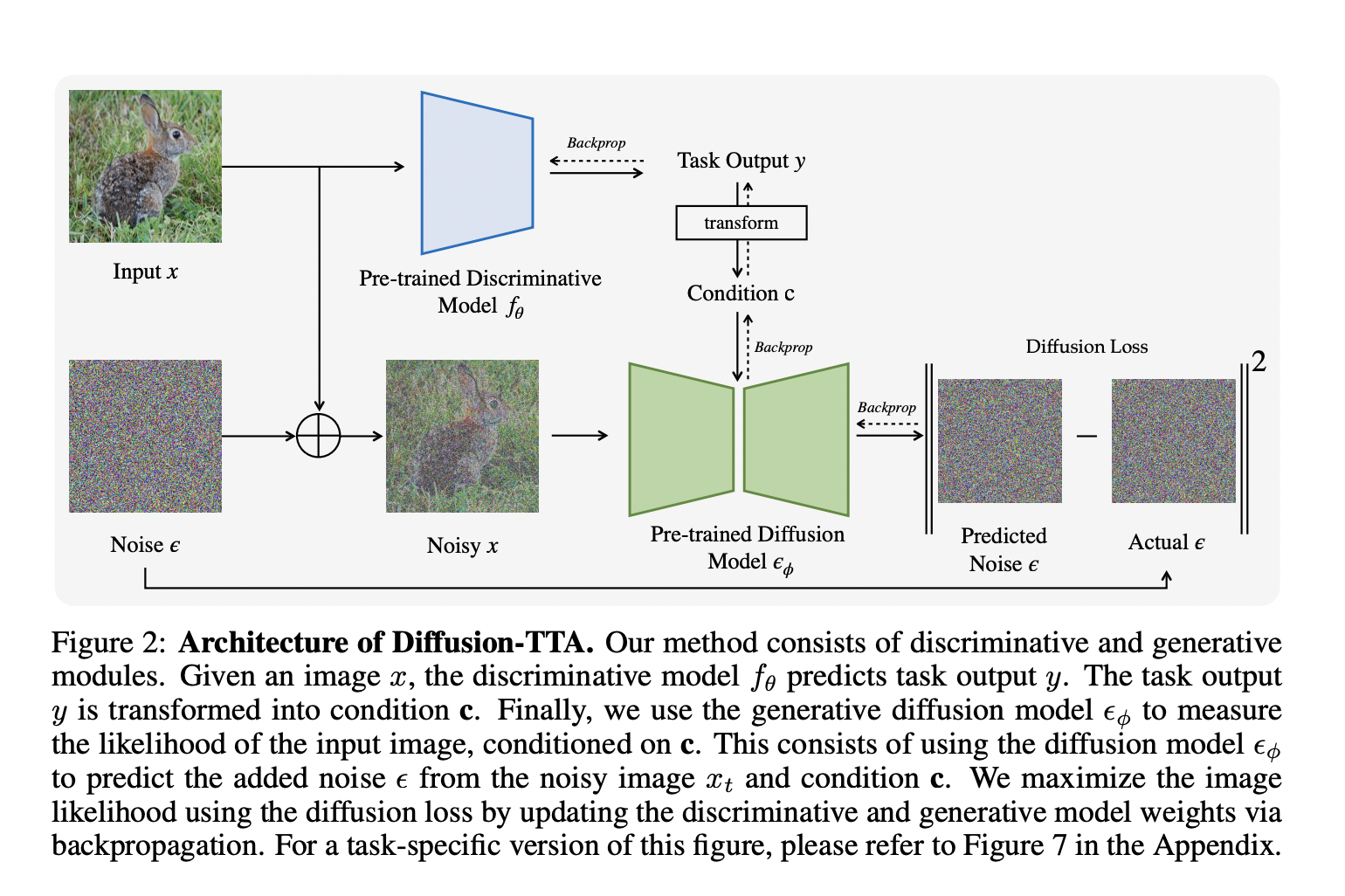

The team built Diffusion-based Test-Time Adaptation (Diffusion-TTA), a method that adapts pre-trained image classifiers, segmenters, and depth predictors to individual unlabelled images. It uses their outputs to modulate the conditioning of an image diffusion model and then maximizes the image likelihood under that model. The setup is reminiscent of an encoder-decoder architecture: a pre-trained discriminative model encodes the image into a hypothesis, such as an object category label, segmentation map, or depth map, and this hypothesis is used as conditioning for a pre-trained generative model that tries to regenerate the image.
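
For classification, the hypothesis has to become a conditioning signal that gradients can flow through. The sketch below assumes, as we understand the paper's classification setup, that the diffusion model is conditioned on the probability-weighted average of per-class embeddings; `soft_conditioning` and the toy embeddings are hypothetical, not the authors' code.

```python
import math
from typing import List

Vec = List[float]


def softmax(logits: Vec) -> Vec:
    """Numerically stable softmax over class logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def soft_conditioning(logits: Vec, class_embeddings: List[Vec]) -> Vec:
    """Turn the classifier's hypothesis into a conditioning vector.

    Assumption: the diffusion model is conditioned on the probability-weighted
    average of per-class embeddings, which stays differentiable with respect to
    the logits (a hard argmax label would block gradients).
    """
    probs = softmax(logits)
    dim = len(class_embeddings[0])
    cond = [0.0] * dim
    for p, emb in zip(probs, class_embeddings):
        for j in range(dim):
            cond[j] += p * emb[j]
    return cond
```

For example, logits `[2.0, 0.0, 0.0]` over embeddings `[[1, 0], [0, 1], [0, 0]]` give a conditioning vector dominated by the first class's embedding while still carrying some of the others, so a gradient on the conditioning can shift probability mass between classes.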

Adaptation is carried out separately for each instance in the test set: the discriminative model is fine-tuned with the image reconstruction (diffusion) loss by backpropagating diffusion likelihood gradients to its weights. Diffusion-TTA effectively adapts image classifiers on both in-distribution and out-of-distribution examples across established benchmarks, including ImageNet and its variants. The researchers show that it outperforms previous state-of-the-art TTA methods and is effective across multiple discriminative model and generative diffusion model variants.
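
The per-instance adaptation loop can be sketched end to end on a toy problem. Every piece here is a hypothetical, dependency-free stand-in: a linear classifier, the soft conditioning above, and, in place of a pre-trained diffusion network, the exact noise predictor for a Gaussian prior x0 ~ N(Dc, I). The gradient of the Monte Carlo diffusion loss is backpropagated by hand through the frozen denoiser, the conditioning, and the softmax into the classifier weights; a real implementation would use an autodiff framework. Fixed `alpha_bars` values stand in for sampled diffusion timesteps and `n_samples` for noise samples per timestep.

```python
import math
import random
from typing import List, Sequence, Tuple

Vec = List[float]
Mat = List[List[float]]  # row-major: Mat[row][col]


def matvec(m: Mat, v: Vec) -> Vec:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def softmax(z: Vec) -> Vec:
    mx = max(z)
    e = [math.exp(v - mx) for v in z]
    total = sum(e)
    return [v / total for v in e]


def diffusion_loss_and_grad(W: Mat, x: Vec, E: Mat, D: Mat,
                            alpha_bars: Sequence[float], n_samples: int,
                            rng: random.Random) -> Tuple[float, Mat]:
    """Monte Carlo diffusion loss for image x and its gradient w.r.t. W.

    Hypothetical toy pieces (not the paper's networks):
      classifier:   probs = softmax(W x)
      conditioning: c = sum_k probs[k] * E[k]
      frozen 'diffusion model': exact noise predictor for the prior
        x0 ~ N(D c, I), eps_hat = s * (x_t - a * D c),
        with a = sqrt(alpha_bar) and s = sqrt(1 - alpha_bar).
    """
    probs = softmax(matvec(W, x))
    m = len(E[0])
    c = [sum(p * e[j] for p, e in zip(probs, E)) for j in range(m)]
    Dc = matvec(D, c)
    loss, count = 0.0, 0
    grad_c = [0.0] * m
    for ab in alpha_bars:                    # fixed values stand in for sampled timesteps
        a, s = math.sqrt(ab), math.sqrt(1.0 - ab)
        for _ in range(n_samples):           # noise samples per timestep
            eps = [rng.gauss(0.0, 1.0) for _ in x]
            x_t = [a * xi + s * ei for xi, ei in zip(x, eps)]
            eps_hat = [s * (xt - a * d) for xt, d in zip(x_t, Dc)]
            r = [e - eh for e, eh in zip(eps, eps_hat)]
            loss += sum(ri * ri for ri in r)
            # dr/dc = a * s * D, so d||r||^2/dc = 2 * a * s * D^T r
            for j in range(m):
                grad_c[j] += 2.0 * a * s * sum(D[i][j] * r[i] for i in range(len(r)))
            count += 1
    loss /= count
    grad_c = [g / count for g in grad_c]
    # Backpropagate through the soft conditioning, the softmax and W x.
    grad_p = [sum(e[j] * grad_c[j] for j in range(m)) for e in E]
    dot = sum(p * gp for p, gp in zip(probs, grad_p))
    grad_z = [p * (gp - dot) for p, gp in zip(probs, grad_p)]
    grad_W = [[gz * xi for xi in x] for gz in grad_z]
    return loss, grad_W


def adapt(W: Mat, x: Vec, E: Mat, D: Mat, steps: int = 50, lr: float = 1.0,
          alpha_bars: Sequence[float] = (0.3, 0.6, 0.9), n_samples: int = 4,
          seed: int = 0) -> Mat:
    """Per-image test-time adaptation: gradient descent on the diffusion loss.

    Starts from a copy of the pre-trained weights, so every test image is
    adapted independently; the toy diffusion model (D) stays frozen.
    """
    rng = random.Random(seed)
    W = [row[:] for row in W]
    for _ in range(steps):
        _, grad_W = diffusion_loss_and_grad(W, x, E, D, alpha_bars, n_samples, rng)
        W = [[w - lr * g for w, g in zip(rw, rg)] for rw, rg in zip(W, grad_W)]
    return W
```

With `W = [[0, 0], [1, 0]]`, `x = [1, 0]`, and identity `E` and `D`, the classifier initially prefers class 1, but class 0's conditioning lets the toy diffusion model explain `x` best, so adaptation shifts probability toward class 0. No label is used anywhere in the loop.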

The researchers also present an ablation analysis of various design choices, studying how Diffusion-TTA's performance varies with hyperparameters such as the diffusion timesteps, the number of samples per timestep, and the batch size. They also study the effect of adapting different model parameters.

The researchers report that Diffusion-TTA consistently outperforms Diffusion Classifier. They conjecture that the pre-trained weight initialization of the discriminative model keeps it from overfitting to the generative loss and converging to a trivial solution.
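
For context, Diffusion Classifier labels an image by evaluating the diffusion model's denoising error under each candidate class conditioning and returning the class with the lowest error. The sketch below illustrates only that decision rule; the per-class prototypes and the toy Gaussian noise predictor are hypothetical stand-ins for a real conditional diffusion model, not the Diffusion Classifier implementation.

```python
import math
import random
from typing import List, Sequence

Vec = List[float]


def expected_denoising_error(x: Vec, prototype: Vec, alpha_bars: Sequence[float],
                             n_samples: int, rng: random.Random) -> float:
    """Monte Carlo estimate of E_{t,eps} ||eps - eps_hat||^2 for one class.

    Hypothetical toy 'diffusion model': the exact noise predictor for the prior
    x0 ~ N(prototype, I), i.e. eps_hat = s * (x_t - a * prototype),
    with a = sqrt(alpha_bar) and s = sqrt(1 - alpha_bar).
    """
    total, count = 0.0, 0
    for ab in alpha_bars:
        a, s = math.sqrt(ab), math.sqrt(1.0 - ab)
        for _ in range(n_samples):
            eps = [rng.gauss(0.0, 1.0) for _ in x]
            x_t = [a * xi + s * ei for xi, ei in zip(x, eps)]
            eps_hat = [s * (xt - a * mu) for xt, mu in zip(x_t, prototype)]
            total += sum((e - eh) ** 2 for e, eh in zip(eps, eps_hat))
            count += 1
    return total / count


def diffusion_classify(x: Vec, prototypes: List[Vec],
                       alpha_bars: Sequence[float] = (0.3, 0.6, 0.9),
                       n_samples: int = 16, seed: int = 0) -> int:
    """Return the class whose conditioning best explains x (lowest error)."""
    errors = []
    for proto in prototypes:
        # Reuse the same noise draws for every class so the comparison is fair.
        rng = random.Random(seed)
        errors.append(expected_denoising_error(x, proto, alpha_bars, n_samples, rng))
    return min(range(len(errors)), key=errors.__getitem__)
```

Diffusion-TTA does not take this argmin directly; it uses the same kind of loss to update the weights of a pre-trained classifier, which is where the conjectured effect of the weight initialization comes in.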

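In conclusion, generative models have previously been used for test-time adaptation of image classifiers and segmenters by co-training the model under a joint discriminative task loss and a self-supervised image reconstruction loss. Diffusion-TTA instead couples an already pre-trained discriminative model with a pre-trained diffusion model at test time, and in the researchers' experiments it outperforms previous state-of-the-art TTA methods.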

Check out the Paper and Project. All credit for this research goes to the researchers of this project.

Arshad is an intern at MarktechPost. He is currently pursuing his Int. MSc in Physics at the Indian Institute of Technology Kharagpur. He believes that understanding things at a fundamental level leads to new discoveries, which in turn lead to advances in technology. He is passionate about understanding nature fundamentally with the help of tools like mathematical models, ML models, and AI.