Google DeepMind Research Introduces FunSearch: A New Artificial Intelligence Method to Search for New Solutions in Mathematics and Computer Science

LLMs excel at understanding and generating human-like text, which improves communication between machines and humans. These models adapt to diverse tasks, including language translation, summarization, question answering, text generation, and sentiment analysis. This flexibility allows them to be deployed across many industries and applications.

However, LLMs sometimes hallucinate, making plausible but incorrect statements. Even highly advanced large language models such as the GPT models can produce confabulations for several reasons. For example, if the input or prompt is ambiguous, contradictory, or misleading, the model might generate a confabulated response based on its interpretation of that input.

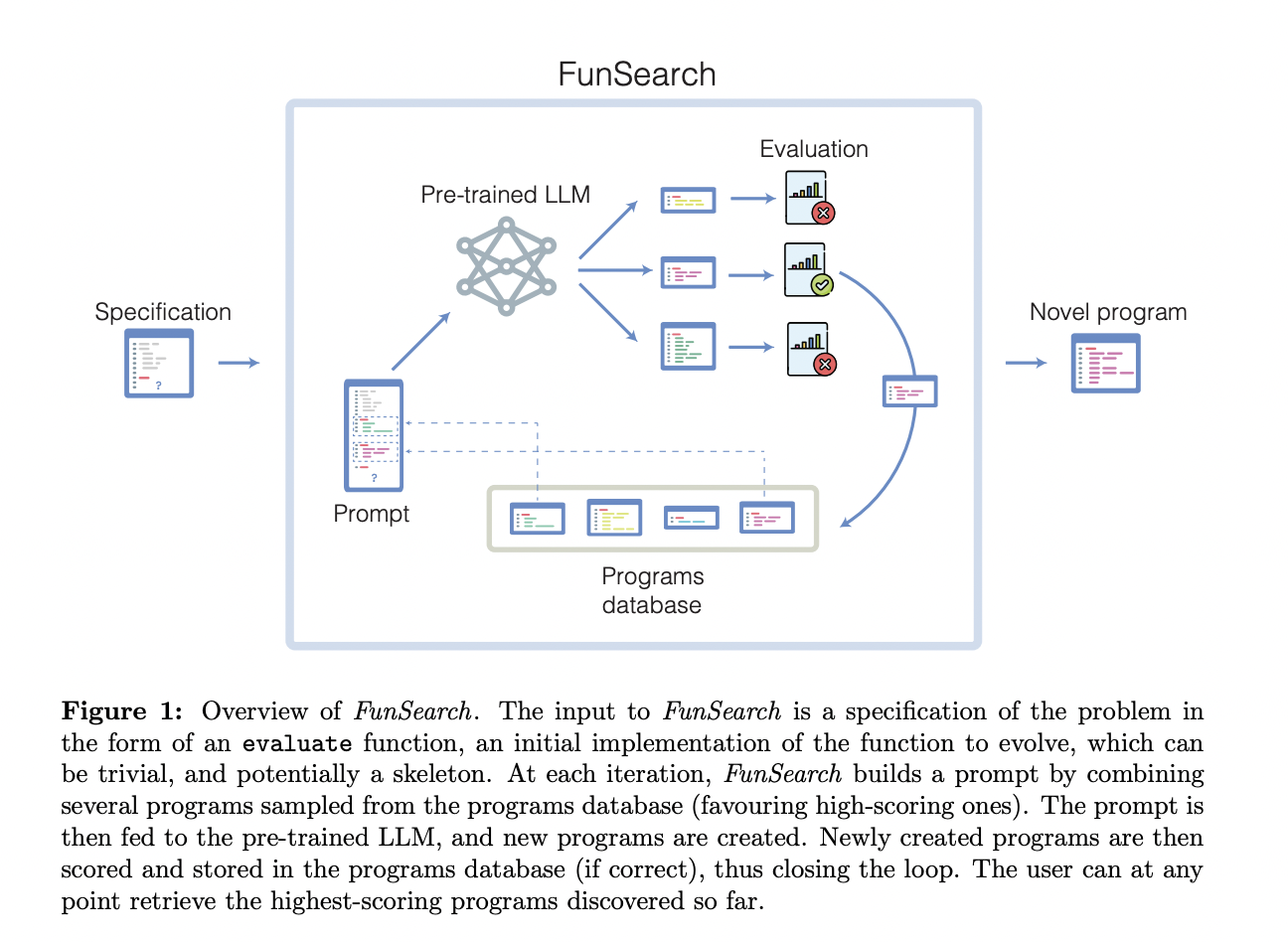

Researchers at Google DeepMind address this limitation with a method called FunSearch. It pairs a pre-trained LLM with an automated evaluator, which guards against confabulations and incorrect ideas. By combining several essential ingredients, FunSearch evolves initial low-scoring programs into high-scoring ones in order to discover new knowledge. Rather than outputting solutions directly, FunSearch produces programs that generate the solutions.

FunSearch operates as an iterative process. In each cycle, the system selects some programs from the current pool and passes them to an LLM, which builds on them creatively to produce new programs. These new programs are evaluated automatically, and the most promising ones are added back to the pool, creating a self-improving loop.
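
The loop described above can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not FunSearch's actual implementation: a "program" is reduced to a list of numbers, `mock_llm_propose` is a hypothetical stand-in for the LLM call, and `evaluate`, `pick_parents`, `TARGET`, and the pool size are all invented for the example.

```python
import random
from typing import List, Tuple

Program = List[float]            # stand-in for program source code
Scored = Tuple[float, Program]   # (score, program)

TARGET = [1.0, -2.0, 0.5]        # hypothetical optimum used only by the toy evaluator


def evaluate(program: Program) -> float:
    """Toy automatic evaluator: higher is better, 0.0 is a perfect score."""
    return -sum((p - t) ** 2 for p, t in zip(program, TARGET))


def mock_llm_propose(parents: List[Program], rng: random.Random) -> Program:
    """Stand-in for the LLM step: blend two parents and perturb the result."""
    a, b = parents
    return [(x + y) / 2 + rng.gauss(0.0, 0.1) for x, y in zip(a, b)]


def pick_parents(pool: List[Scored], rng: random.Random) -> List[Program]:
    """Draw four pool members at random (with replacement), keep the best two."""
    contenders = sorted((rng.choice(pool) for _ in range(4)),
                        key=lambda sp: sp[0], reverse=True)
    return [contenders[0][1], contenders[1][1]]


def search(iterations: int = 500, pool_size: int = 20, seed: int = 0) -> Scored:
    rng = random.Random(seed)
    initial = [0.0, 0.0, 0.0]                      # low-scoring starting program
    pool: List[Scored] = [(evaluate(initial), initial)]
    for _ in range(iterations):
        child = mock_llm_propose(pick_parents(pool, rng), rng)
        pool.append((evaluate(child), child))      # automatic evaluation
        pool.sort(key=lambda sp: sp[0], reverse=True)
        del pool[pool_size:]                       # keep only the most promising
    return pool[0]


if __name__ == "__main__":
    best_score, best_program = search()
    print(best_score, best_program)
```

Because the pool only ever drops its lowest-scoring members, the best score in this sketch can never get worse from one cycle to the next, which is the self-improving property the loop relies on.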

The better-performing programs are sampled and fed back into the LLM as prompts to be improved. The search starts from an initial program written as a skeleton, and only the part that governs the critical program logic is evolved. For example, the researchers fix a greedy program skeleton that makes its decision at every step by calling a priority function, and it is this priority function that evolves. An island-based evolutionary method maintains a large pool of diverse programs, and the system is scaled asynchronously to broaden the search for new results.
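
To make the island idea more concrete, here is a minimal sketch of a program pool split into independent sub-populations. The article does not spell out how islands are sampled or refreshed, so everything specific here is an assumption made for illustration: the class name `IslandPool`, the two-way tournament in `sample`, and the `reset_weakest_half` policy that reseeds the weaker islands from the stronger ones.

```python
import random
from typing import List, Tuple

Scored = Tuple[float, str]  # (score, program text)


class IslandPool:
    """Several independent sub-populations ("islands") of scored programs."""

    def __init__(self, num_islands: int, seed: Scored, rng: random.Random) -> None:
        # Every island starts from the same initial program.
        self.islands: List[List[Scored]] = [[seed] for _ in range(num_islands)]
        self.rng = rng

    def best(self, island_id: int) -> Scored:
        return max(self.islands[island_id], key=lambda sp: sp[0])

    def sample(self) -> Tuple[int, Scored]:
        """Pick a random island, then the better of two random members."""
        island_id = self.rng.randrange(len(self.islands))
        members = self.islands[island_id]
        pick = max(self.rng.choice(members), self.rng.choice(members),
                   key=lambda sp: sp[0])
        return island_id, pick

    def register(self, island_id: int, scored: Scored) -> None:
        """A new program joins the island its prompt was sampled from."""
        self.islands[island_id].append(scored)

    def reset_weakest_half(self) -> None:
        """Assumed policy: wipe the weaker half, reseed from stronger islands."""
        ranked = sorted(range(len(self.islands)), key=lambda i: self.best(i)[0])
        half = len(ranked) // 2
        weak, strong = ranked[:half], ranked[half:]
        for i in weak:
            donor = self.rng.choice(strong)
            self.islands[i] = [self.best(donor)]
```

Keeping the islands separate lets each one explore a different direction, while the occasional reset stops weak islands from consuming effort indefinitely. Since each island only needs `sample` and `register`, many workers could call them independently, which is one way the asynchronous scaling mentioned above might be arranged.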

For bin packing, the heuristic found by FunSearch follows the same general strategy as existing heuristics, with one difference. Instead of always packing an item into the bin with the least remaining capacity, it assigns the item to such a bin only if the fit is very tight after placing the item. This strategy avoids leaving small gaps in bins that are unlikely to ever be filled. A crucial aspect of FunSearch is that it operates in the space of programs rather than searching directly for constructions. This gives FunSearch the potential for real-world applications.
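
The difference between the two strategies can be illustrated with a greedy skeleton that delegates each placement decision to a priority function. This is a hedged sketch, not the heuristic FunSearch actually discovered: `pack`, `best_fit_priority`, `tight_fit_priority`, and the `tight` threshold are hypothetical names and values chosen for the example, and sizes are integers to keep the arithmetic exact.

```python
from typing import Callable, List, Sequence

Priority = Callable[[int, int], float]  # (item size, bin remaining capacity) -> score


def pack(items: Sequence[int], capacity: int, priority: Priority) -> List[int]:
    """Greedy online skeleton: returns the remaining capacity of each opened bin."""
    bins: List[int] = []
    for item in items:
        fitting = [i for i, rem in enumerate(bins) if rem >= item]
        if fitting:
            # The priority function alone decides which fitting bin wins.
            chosen = max(fitting, key=lambda i: priority(item, bins[i]))
            bins[chosen] -= item
        else:
            bins.append(capacity - item)  # nothing fits: open a new bin
    return bins


def best_fit_priority(item: int, remaining: int) -> float:
    """Baseline: always prefer the bin that is left with the least space."""
    return -(remaining - item)


def tight_fit_priority(item: int, remaining: int, tight: int = 1) -> float:
    """Illustrative variant: favor the fullest bin only when the fit is very tight."""
    leftover = remaining - item
    if leftover <= tight:
        # Assumes capacities far below 1_000_000, so tight fits always win.
        return 1_000_000 - leftover
    # Otherwise prefer bins that keep more room, avoiding small unusable gaps.
    return leftover


if __name__ == "__main__":
    items = [6, 5, 4, 3, 2]
    print(pack(items, 10, best_fit_priority))
    print(pack(items, 10, tight_fit_priority))
```

Only the priority function changes between the two runs while `pack` stays fixed, which mirrors the idea of evolving a small piece of logic inside a fixed skeleton.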

This is only an initial step. FunSearch is expected to improve in step with the broader evolution of LLMs. The researchers are committed to extending its capabilities to tackle a range of critical scientific and engineering challenges facing society.

Check out the Paper and Blog. All credit for this research goes to the researchers of this project.

Arshad is an intern at MarktechPost. He is currently pursuing an Int. MSc in Physics at the Indian Institute of Technology Kharagpur. He believes that understanding things at a fundamental level leads to new discoveries, which in turn lead to advances in technology. He is passionate about understanding nature at a fundamental level with the help of tools such as mathematical models, ML models, and AI.