This AI Paper Unveils Amazon’s Latest Machine Learning Insights on Buggy-Code in Large Language Models

Programming is complex, and writing code without errors is rarely easy. Large language models of code (Code-LLMs) have been developed to help with code completion, but they can overlook bugs in the code context. To address this issue, researchers from the University of Wisconsin–Madison and Amazon Web Services studied how Code-LLMs perform when the code context contains potential bugs, and how that performance can be improved.

Research in automatic program repair, leveraging Code-LLMs, aims to alleviate the burden of identifying and fixing programming bugs. Similar to adversarial examples in other domains, small semantic-preserving code transformations can degrade the performance of code-learning models. Existing benchmarks like CodeXGLUE, CodeNet, and HumanEval have been pivotal for studying code completion and program repair. To increase the amount of available data, some methods synthesize artificial bugs through code mutants, while others learn to create bugs.

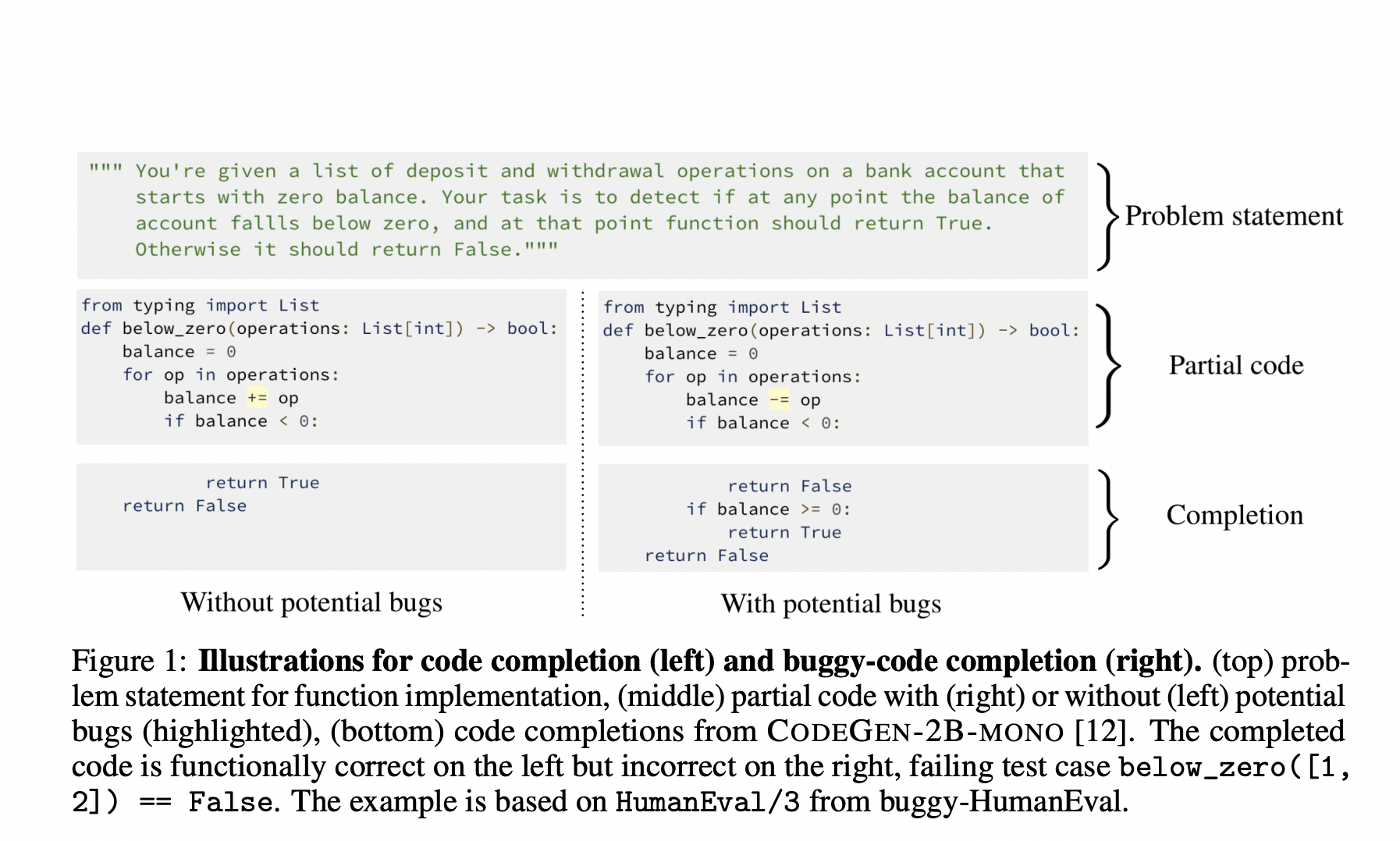

Code completion, a crucial feature in integrated development environments, has advanced with Transformer-based language models of code. However, these models often overlook the presence of bugs, a common occurrence in software development. The research introduces the concept of buggy-code completion (bCC), in which the code context contains potential bugs, and explores how Code-LLMs behave in such scenarios. Two benchmark datasets, buggy-HumanEval and buggy-FixEval, are introduced to evaluate Code-LLMs in the presence of synthetic and realistic bugs, and they reveal significant performance degradation. Post-hoc mitigation methods are explored to address this issue.
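To make the bCC setting concrete, here is a minimal sketch of what one instance might look like. The function, the flipped operator, the completions, and the test cases are all hypothetical illustrations, not items from buggy-HumanEval or buggy-FixEval, and `pass_rate` is an assumed helper rather than the paper's evaluation harness.

```python
# Hypothetical bCC instance: a partial program (the code context) that may
# contain a potential bug, plus test cases that judge any completion.

CLEAN_PREFIX = '''
def count_positive(nums):
    count = 0
    for n in nums:
        if n > 0:
'''

# Same prefix with one operator flipped (a synthetic potential bug).
BUGGY_PREFIX = '''
def count_positive(nums):
    count = 0
    for n in nums:
        if n < 0:
'''

# A completion that simply continues the pattern it sees in the prefix.
NAIVE_COMPLETION = '''            count += 1
    return count
'''

# A completion that works around the flipped condition.
ADAPTED_COMPLETION = '''            continue
        if n > 0:
            count += 1
    return count
'''

# (input, expected output) pairs; illustrative only.
TESTS = [([1, -2, 3], 2), ([], 0), ([-1, -1], 0), ([0, 5], 1)]


def pass_rate(prefix, completion, tests):
    """Fraction of test cases passed by the program prefix + completion."""
    namespace = {}
    exec(prefix + completion, namespace)  # toy code only; never exec untrusted code
    func = namespace["count_positive"]
    passed = 0
    for arg, expected in tests:
        try:
            if func(arg) == expected:
                passed += 1
        except Exception:
            pass  # a crash counts as a failed test
    return passed / len(tests)


for name, prefix, completion in [
    ("clean + naive", CLEAN_PREFIX, NAIVE_COMPLETION),
    ("buggy + naive", BUGGY_PREFIX, NAIVE_COMPLETION),
    ("buggy + adapted", BUGGY_PREFIX, ADAPTED_COMPLETION),
]:
    print(name, pass_rate(prefix, completion, TESTS))
```

In this toy case the naive completion passes all four tests on the clean prefix but only one of four on the buggy prefix, while the adapted completion recovers a full pass rate from the same buggy prefix. That is the sense in which the bug is only "potential": whether it breaks the program depends on how the code is completed.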

The proposed mitigation methods are Removal-then-completion, which eliminates buggy fragments before completing; Completion-then-rewriting, which fixes bugs after completion using models like RealiT; and Rewriting-then-completion, which resolves bugs by rewriting code lines before completion. Measured by pass rates, Completion-then-rewriting and Rewriting-then-completion perform best. Models such as RealiT and INCODER-6B serve as code fixers or infilling language models in these methods.
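The three strategies differ mainly in where the repair step sits relative to the completion step. The sketch below shows that ordering only. Every function here is a hypothetical stand-in: `toy_complete` plays the role of a Code-LLM, `toy_fix` the role of a code fixer or line rewriter, and `toy_is_buggy` the role of a bug detector. None of them reflects the actual interfaces of RealiT, INCODER-6B, or the paper's implementation.

```python
from typing import Callable

Completer = Callable[[str], str]     # prefix -> completion text
Fixer = Callable[[str], str]         # code -> repaired code
LineFilter = Callable[[str], bool]   # line -> True if flagged as buggy


def removal_then_completion(prefix: str, is_buggy: LineFilter,
                            complete: Completer) -> str:
    """Drop flagged fragments, then complete from what remains."""
    kept = [line for line in prefix.splitlines() if not is_buggy(line)]
    cleaned = "\n".join(kept) + "\n"
    return cleaned + complete(cleaned)


def completion_then_rewriting(prefix: str, complete: Completer,
                              fix: Fixer) -> str:
    """Complete the buggy prefix as-is, then repair the whole program."""
    return fix(prefix + complete(prefix))


def rewriting_then_completion(prefix: str, rewrite: Fixer,
                              complete: Completer) -> str:
    """Repair the prefix first, then complete the repaired prefix."""
    repaired = rewrite(prefix)
    return repaired + complete(repaired)


# --- Toy stand-ins (assumptions for illustration only) ---
PREFIX = "def add(a, b):\n    total = a - b\n"  # potential bug: '-' for '+'


def toy_complete(prefix: str) -> str:
    # Pretends to be a model that conditions on the given context.
    return "    return total\n" if "total" in prefix else "    return a + b\n"


def toy_fix(code: str) -> str:
    # Pretends to be a fixer that knows the intended operator.
    return code.replace("a - b", "a + b")


def toy_is_buggy(line: str) -> bool:
    return "a - b" in line


for program in (
    removal_then_completion(PREFIX, toy_is_buggy, toy_complete),
    completion_then_rewriting(PREFIX, toy_complete, toy_fix),
    rewriting_then_completion(PREFIX, toy_fix, toy_complete),
):
    print(program)
```

With real models the three pipelines would generally produce different programs, since removal discards context that the other two keep, and rewriting before completion lets the completer see repaired code.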

The presence of potential bugs substantially degrades Code-LLMs’ generation performance, with passing rates dropping by more than 50% for a single bug. The Heuristic Oracle, which is given knowledge of the bug location, shows a notable performance gap between buggy-HumanEval and buggy-FixEval, underscoring the importance of bug location. Likelihood-based methods perform differently on the two datasets, suggesting that the nature of the bugs influences which aggregation method works best. Post-hoc mitigation methods, including removal-then-completion and rewriting-then-completion, improve performance. Still, a gap remains, indicating the need for further research on code completion in the presence of potential bugs.
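One way to see why the aggregation choice can matter for likelihood-based localization is a small sketch like the following. It assumes each line of the code context comes with per-token log-probabilities from some language model, and it flags the line with the lowest aggregated score. The numbers are invented, and both the scoring scheme and the `most_suspicious_line` helper are assumptions for illustration, not the paper's exact measure.

```python
from statistics import mean

# Hypothetical per-token log-probabilities, one list per line of code.
LINE_LOGPROBS = [
    [-0.1, -0.2, -0.1],                                # line 0: all tokens likely
    [-0.3, -0.2, -4.0, -0.1, -0.1, -0.1, -0.1, -0.1],  # line 1: one very unlikely token
    [-1.5, -1.6],                                      # line 2: uniformly mediocre tokens
]


def most_suspicious_line(line_logprobs, aggregate):
    """Index of the line whose aggregated token log-probability is lowest."""
    scores = [aggregate(token_lps) for token_lps in line_logprobs]
    return min(range(len(scores)), key=lambda i: scores[i])


# 'min' reacts to a single surprising token; 'mean' reacts to overall line quality.
print("min aggregation picks line", most_suspicious_line(LINE_LOGPROBS, min))
print("mean aggregation picks line", most_suspicious_line(LINE_LOGPROBS, mean))
```

Here the two aggregations disagree: taking the minimum singles out line 1 because of its one surprising token, while the mean singles out line 2. A bug that changes a single token, such as a flipped operator, and a bug spread across a line could therefore favor different aggregations.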

In summary, the research can be presented in the following points:

- The research introduces a new task called bCC.

- bCC generates functional implementations from a code context with potential bugs.

- The study evaluates Code-LLMs on two datasets, buggy-HumanEval and buggy-FixEval.

- Code-LLMs’ performance degrades significantly, with test-case pass rates dropping below 5%.

- Post-hoc mitigation methods are proposed, including removal-then-completion and rewriting-then-completion, yet performance gaps persist.

- This work enhances the understanding of Code-LLMs in bCC.

- The research suggests ways to improve code completion in the presence of potential bugs.

Check out the Paper. All credit for this research goes to the researchers of this project.

Hello, my name is Adnan Hassan. I am a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.